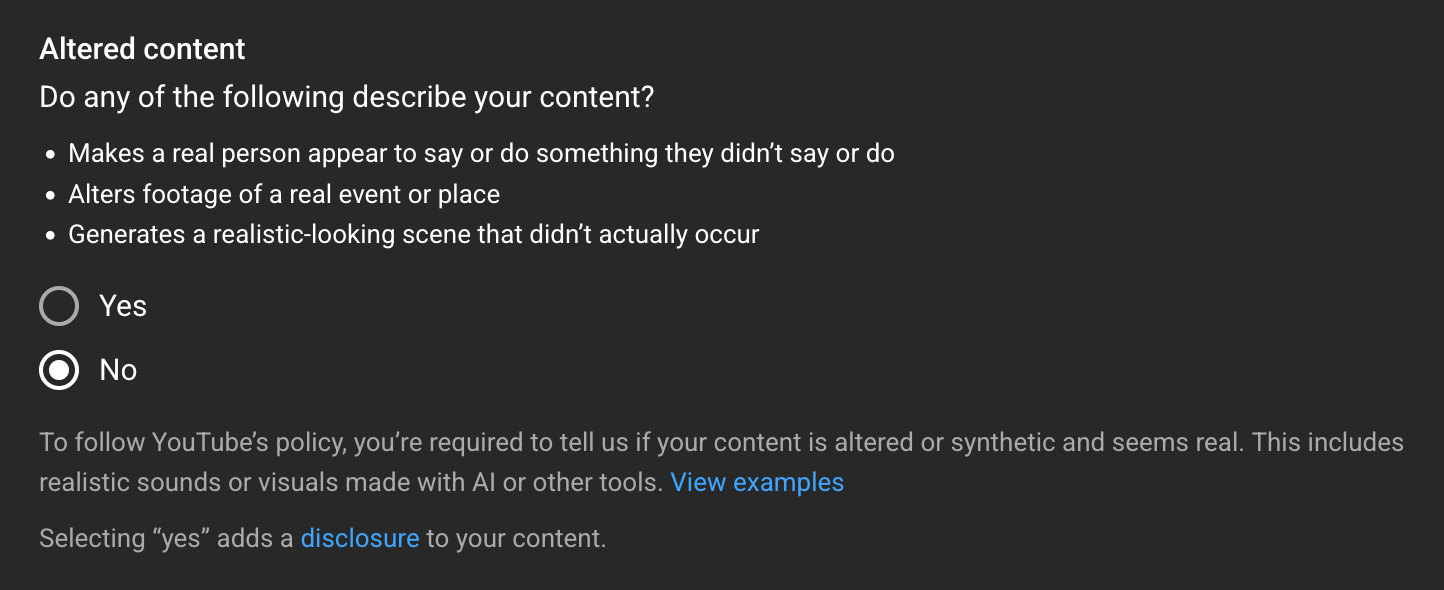

Altered content: synthetic but seems real

When posting my photos online I used to say (proudly): no AI tool was used in the process of making this photo. I can no longer state that.

Do any of the following describe your content?

- Makes a real person appear to say or do something they didn't say or do

- Alters footage of a real event or place

- Generates a realistic-looking scene that didn't actually occur

To follow YouTube's policy, you're required to tell us if your content is altered or synthetic and seems real. This includes realistic sounds or visuals made with AI or other tools.

(Source: YouTube)

One of the most widespread and frequently referenced "definitions" of non-real content comes from YouTube - as seen above.

Every time you upload a new video you have to answer this with a Yes or a No.

What about photos?

With my digital camera the line between "not touched by AI" and "altered" becomes blurry.

For a moment, let's take smartphones out of the equation - because we all know how far they can go to "help you" capture that moment.

Let's take a traditional digital camera, one that is not "smart".

You press the button, what happens?

The sensor captures the incoming light and, in modern hardware, the camera immediately applies some "rules" or "filters".

You get "raw" data, and - again - for a moment let's believe it's not altered in any way. This is the file that is as close to reality as it can get.

And then you move it to your editing tool.

The pixels themselves may NOT get changed - no new "artifacts" are introduced - but what about colors?

The moment you apply an "automatic adjust", it's very likely that an AI-optimized setting or filter gets applied to your image - making it AWESOME looking!

You are very happy with the result and can't wait to post it online.

Yes, the "scene" is still real, but can you claim that you did not use any AI tool during the process?

OK, at this point, YouTube's "definition" still stands:

- the photo contains a real, physically present place

- the "event" (e.g. a sunset) did indeed take place, it happened

- no additional "synthetic" artifact was added to the photo

Great!

What about AI-generated photos?

Taking your beautifully captured sunset photo, you upload it to a gen-AI service and say to your favorite AI assistant:

- Hey, please take this photo and generate a new photo that is as close to the original as possible...

- Oh, and please make the colors "pop" more! 😄

This is where it gets interesting.

The result will be almost identical to the original photo. With relatively static "nature" content, no human can spot the differences.

But the "photo" was not taken in real life.

THAT sunset never happened.

Where to go from here?

Without any physical evidence, GPS coordinates, or exact date and time stamps, it's impossible to "prove" that the photo was actually taken in real life.

And the scary bit is this: who cares anymore?